Designing Chatbots That Improve the Experience of Accessing Benefits

When people arrive at the front door of government systems, they’re often in a state of vulnerability: needing food, health care, or financial support. And then, they encounter a chatbot.

If that chatbot feels cold, confusing, redundant, or unhelpful, it acts as yet another hurdle to jump over. We’ve seen firsthand that trust is easily eroded if a bot doesn’t work well, and that perception often extends beyond the tool to the states and agencies themselves.

But done right, chatbots are a promising tool for quickly and effectively supporting people navigating the safety net while potentially reducing call center volume and speeding up access to information. To build trust with clients, chatbots must be tailored to the right use cases, designed with accessible and human-centered flows, and actively account for points where people often get stuck. Trust doesn’t come from automation alone—it comes from thoughtful implementation.

When is a chatbot useful?

A chatbot is a good idea when people need fast, self-service answers to common questions, such as checking application status, eligibility criteria, required documents, or benefit disbursement dates. In our research, we found that people often access government services on the go: on public transit, at the playground with their kids, or during breaks between jobs. Instead of calling a state office to ask when Summer EBT benefits will be loaded or what forms are needed for Medicaid renewal, a resident could use a chatbot to get immediate information any time of day. This improves access for people who need safety net services, especially those without the time or flexibility to wait on hold or visit an office.

For administrators, a chatbot reduces repetitive inquiries and frees up staff to assist with more complex issues like appeals, case reviews, or service coordination: tasks where human support is essential. A chatbot can work like the waiting room of a doctor's office, triaging questions that are easy to answer so that more acute concerns can be escalated to staff. Chatbots can serve as a funnel toward in-depth support and, in the meantime, as a bridge that extends access, improves efficiency, and increases satisfaction across high-volume government programs.

What makes for a valuable chatbot flow?

For services that many individuals and families rely on, it’s important that chatbots use clear language, offer flexible navigation, and always provide a way to connect with a real person when needed. People can feel frustrated with chatbots when they can’t find clear or accurate answers—especially if the system doesn’t include up-to-date information or can’t handle personalized situations, like updating household income or understanding complex eligibility scenarios.

Good chatbot design uses an approachable tone, plain language, and an information hierarchy that is intuitive, visual, and prioritizes people's top concerns. Designing a good chatbot isn't just about plugging in answers; it's about shaping a responsive conversation.

Tone also matters, since a chatbot that sounds robotic, overly formal, or bureaucratic can feel alienating. For chatbots within benefits programs, the focus should be on warm, direct phrasing that signals the bot is there to help, even proactively. For example, because categorical eligibility across programs like SNAP, Medicaid, and WIC means people are often eligible for multiple programs, a chatbot can connect the dots across agencies so that people receive more wraparound support.

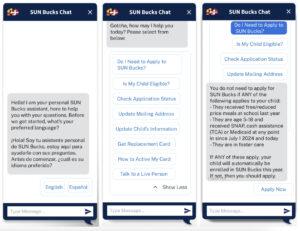

We recently worked with the State of Maryland on a chatbot designed to help families applying for Summer EBT. When families visited the Maryland Summer EBT site, the chatbot helped them determine whether they needed to apply for the program based on their streamlined certification status, update their mailing address, and handle other self-service needs. While designing it, we created a user-informed checklist for chatbots meant to enhance access to public benefits:

- Start small with chatbot experiments. They don't have to use generative AI at the beginning and can rely on simpler approaches, like templated responses to common questions.

- Use clickable options to help guide people through complex eligibility or application questions, particularly if a chatbot isn’t using AI to start.

- Use clear terminology and spell out uncommon acronyms. Remember that the terms or acronyms agency staff use internally might not be familiar to the people who need the program. We lean toward the terminology clients use, to maximize comprehension and approachability.

- Enhance the user experience with a logical flow of options and answers that maps to the questions and issues customers most often bring to the call center.

Bridging automation and human support: New York’s live chat pilot

When we collaborated with the New York State Department of Health's WIC program (Special Supplemental Nutrition Program for Women, Infants, and Children), our initial goal was to introduce a live chat feature, but it quickly became clear that no single tool operates in isolation. In practice, chatbots, websites, and human agents together make up a shared digital ecosystem, and people experience them as one unified service. This became evident when nearly half of users who interacted with Wanda, the WIC virtual assistant, believed they were beginning their WIC application. The disconnect between what the system could do and what users expected revealed a core design challenge: the need for a seamless bridge between automation and human support.

We piloted a hybrid model, layering live chat onto Wanda so people could self-serve for basic questions (like "Am I eligible?") and escalate to a live agent for more complex needs. But applicants weren't just seeking information; they were looking for action: confirmation, next steps, resolution. Because New York State administers a decentralized WIC program, state-level live chat agents lacked access to backend systems, which limited their ability to fully support clients and often required referrals to local agencies for resolution. Ultimately, together with our government partners, we decided to invest in making the Wanda chatbot's self-service pathways more robust, actionable, and aligned with user needs, rather than in a new live chat tool that offered limited additional value. This decision went hand in hand with user-tested improvements to the website, so that the chatbot and online resources work together seamlessly.

Piloting live chat in a rapid, iterative way—rather than scaling up a new tool across the state right away—allowed the Department of Health to be strategic and human-centered in where to invest technology and human resources in order to meet program participants’ biggest customer service needs.

For high-touch programs like WIC, successful chatbot design requires thinking beyond individual interactions. This process taught us the importance of three things designers need to account for:

- Clearly communicate the chatbot’s capabilities: Chatbots used in government contexts often don’t reach the same level of sophistication as more widely known systems like Amazon’s Alexa. It’s important to ensure users understand what the chatbot can and can’t do to set appropriate expectations.

- Design for seamless escalation: Implement mechanisms that allow for smooth transitions from automated responses to human agents when necessary.

- Integrate backend systems: Where possible, connect chatbots and live agents to backend systems (such as a case management system) to enable more comprehensive support and reduce the need to bounce the participant to yet another person, website, or support avenue.

Without these supports, automation risks feeling like a dead end—eroding trust rather than earning it.

Opportunities for scalability

As states look to improve their digital service delivery, there are opportunities to scale chatbot implementations. For states to succeed in this work, a thoughtful, phased approach is key. Starting with a chatbot that doesn't use generative AI can be an effective way to guide users through complex eligibility or application questions, especially when prompts are well crafted and address the most common client concerns. Agencies might also consider launching small-scale AI chatbot pilots focused on narrow, well-defined use cases. These targeted experiments allow states to explore AI capabilities without a full system overhaul, enabling gradual, evidence-informed improvements over time.

The work we’ve seen unfold in states like New York and Maryland shows that the potential for scaling chatbot technology in public benefits is real and achievable. By learning from these experiences and adapting them to their own contexts, other states can follow suit, improving access to essential services while reducing the burden on call centers and human staff. With the right strategies and a commitment to ongoing improvement, this kind of work can be replicated in many different regions, opening the door to more efficient, user-friendly public services nationwide.